
Recently, a study by science and innovation economist Caroline Fry and her colleagues at the University of Hawaiʻi at Mānoa in the United States sparked a debate among academics in Indonesia.

ecosystem (Irawan et al., 2023)

The study, published in the journal Research Policy in 2023, evaluated the impact of Indonesia's research productivity assessment system, in particular the SINTA (Science and Technology Index) platform.

Fry and her team analyzed data on around 200,000 researchers registered on SINTA, along with their publication records in journals indexed by Scopus, a widely used research database.

They found that, overall, research output in Indonesia increased by almost 80,000 publications during 2016-2019, the three-year period after SINTA was announced.

Even after accounting for other factors that could already have been driving publications up before SINTA's launch in 2016, such as regulations requiring students to publish a journal article in order to graduate and rules making publication a prerequisite for incentive payments to lecturers and researchers, the study still found a 25% annual increase in productivity relative to a control sample of researchers in Thailand and the Philippines.

Based on these findings, Fry and her colleagues recommend SINTA as an easy, low-cost way to support research productivity, especially in developing countries.

However, this conclusion has been criticized by some Indonesian academics.

Although SINTA has been shown to increase productivity, these academics argue that the system actually reinforces a research culture that prioritizes speed and quantity over quality.

To “maximize” their SINTA scores, for example, Indonesian researchers have focused on non-journal publications with weaker peer review, such as conference proceedings. Indeed, according to Fry et al.’s findings, conference proceedings accounted for the largest share, more than 60%, of the increase in publications from 2016 to 2019.

“Why do they prefer to strengthen this citation-based metric? Actually, their opinions are not new. They also forget the more important message to practice good science,” said Dasapta Erwin Irawan, a geology lecturer at the Bandung Institute of Technology (ITB).

“This […] is related to research integrity.”

Experts in ‘Outsmarting’ the System

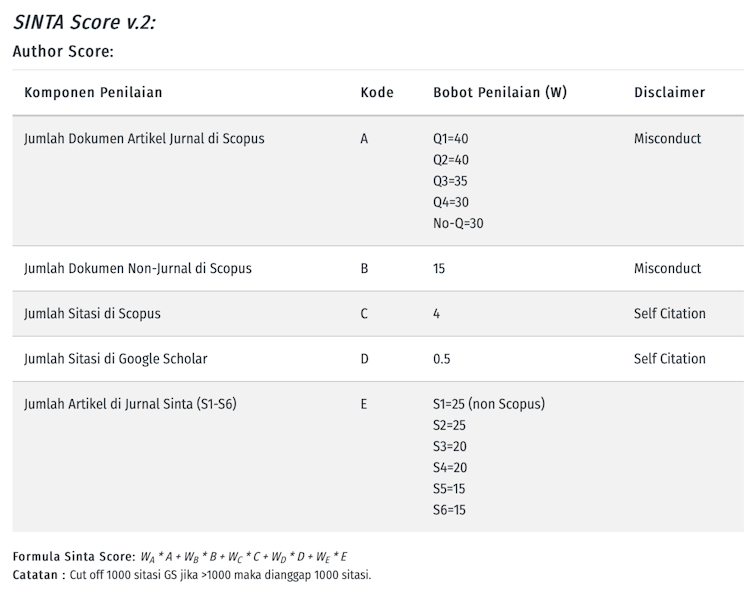

In their study, Fry and her colleagues break down how SINTA ranks research performance, using the following formula:

SINTA Score v2.0 Formula. (SINTA – FAQ Page)

Within the 2016-2019 rise in Indonesian researchers' output, the research team found that articles in reputable (Q1-Q2) journals made up the smallest share of the increase, only 14%.

The largest contributor, at 62%, was conference papers, which Scopus indexes as non-journal publications.
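To put these shares in absolute terms, the rough sketch below applies the reported percentages (14% for Q1-Q2 journal articles, 62% for conference papers) to the overall increase of almost 80,000 publications. Treating 80,000 as the exact total is an assumption made here for illustration, so the resulting counts are approximations, not figures reported by the study.

```python
# Rough conversion of the reported shares of the 2016-2019 publication
# increase into approximate publication counts.
# Assumption: the total increase is taken as exactly 80,000,
# although the study reports "almost 80,000".
TOTAL_INCREASE = 80_000

# Share of the increase attributed to each category (as fractions).
shares = {
    "Q1-Q2 journal articles": 0.14,
    "Conference papers": 0.62,
}

def approximate_counts(total, share_by_category):
    """Return the approximate number of publications in each category."""
    return {name: round(total * share) for name, share in share_by_category.items()}

counts = approximate_counts(TOTAL_INCREASE, shares)
for name, count in counts.items():
    print(f"{name}: ~{count:,}")
```

This yields roughly 11,200 Q1-Q2 journal articles against roughly 49,600 conference papers, slight overestimates given that the true total is somewhat below 80,000.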

“In general, proceedings are non-peer-reviewed scientific works. They function more as collections of papers published in connection with conferences or academic workshops. However, in our country, they are often used as a way to boost the number of publications,” said Ilham Akhsanu Ridlo, a public health lecturer at Airlangga University (Unair), Surabaya.

Some authors writing for The Conversation Indonesia report, for example, that foreign publishers of Scopus-indexed conference proceedings commonly conduct reviews that ignore substance and cover only matters such as layout and style.

Sometimes, according to these authors, the publication process lacks the level of peer review applied to journal articles and depends solely on the discretion of the event organizer.

Under the SINTA formula, just three Scopus-indexed conference papers (weighted 15 each, 45 in total) already earn Indonesian researchers a higher score than a single Q1 journal article (the top category, weighted 40).
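The arithmetic behind this comparison can be sketched as follows, using only the two weights cited here: 15 per Scopus-indexed conference paper and 40 per Q1 journal article. The full SINTA v2.0 formula has other components that this sketch deliberately leaves out, and the `weighted_score` function and category labels are hypothetical, not part of SINTA itself.

```python
import math

# Per-publication weights as cited in the text. This is a partial model:
# other SINTA categories and formula components are omitted.
WEIGHTS = {
    "scopus_conference": 15,
    "q1_journal": 40,
}

def weighted_score(publications):
    """Sum the weights of a list of publication categories (hypothetical helper)."""
    return sum(WEIGHTS[category] for category in publications)

proceedings_only = weighted_score(["scopus_conference"] * 3)  # 3 x 15
one_q1_article = weighted_score(["q1_journal"])               # 1 x 40

# Smallest number of conference papers whose combined weight strictly
# exceeds that of one Q1 article.
papers_needed = math.floor(WEIGHTS["q1_journal"] / WEIGHTS["scopus_conference"]) + 1

print(proceedings_only, one_q1_article, papers_needed)  # 45 40 3
```

Two conference papers (30 points) fall short of one Q1 article, but a third tips the balance, which is why the threshold in the text is three.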

Even within the most reputable tiers (Q1-Q2), Fry and colleagues found that Indonesian researchers increased their Q2 journal publications more than their Q1 publications.

This is likely because, under the SINTA scoring system, journals in both Quartile 1 (Q1) and Quartile 2 (Q2) of the SCImago Journal Rank receive the same maximum score of 40, even though their reputation and quality differ somewhat. Under this system, researchers tend to choose Q2 journals, whose publication process is relatively less stringent than that of Q1 journals (though this is not always the case).
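Because Q1 and Q2 articles carry the same weight, a score that simply sums per-publication weights cannot tell the two tiers apart. The minimal sketch below uses only the weights stated in the text and a hypothetical `weighted_score` helper (not SINTA's actual code) to show two publication records that receive identical scores even though one consists entirely of Q1 articles.

```python
# Weights as described in the text: Q1 and Q2 journal articles both score 40.
# Other SINTA categories and formula components are omitted from this sketch.
WEIGHTS = {"q1_journal": 40, "q2_journal": 40}

def weighted_score(publications):
    """Sum per-publication weights (hypothetical helper, not SINTA's code)."""
    return sum(WEIGHTS[category] for category in publications)

researcher_a = ["q1_journal", "q1_journal"]  # two Q1 articles
researcher_b = ["q2_journal", "q2_journal"]  # two Q2 articles

score_a = weighted_score(researcher_a)
score_b = weighted_score(researcher_b)
print(score_a, score_b, score_a == score_b)  # 80 80 True
```

Since the score offers no extra reward for a Q1 venue, a researcher optimizing for the score alone has no incentive to prefer Q1 over Q2, which is consistent with the pattern Fry and colleagues observed.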

Fry and her team even acknowledged that this evaluation system can change researchers' behavior as they try to “maximize” their scores.

What has been missed in the discussion

Ilham believes that SINTA is not entirely bad and can still play an important role.

However, that role should be limited to serving as an evaluation tool for individual academics or institutions.

“For example, as a lecturer, I can see my achievements: ‘Why was I so productive before? What needs to be improved?’ Then the research areas I have not yet covered, and who cites my research,” said Ilham.

“It is more of an evaluation function like that, not as a tool for comparing individuals and institutions because the resource conditions are not the same.”

The problem, Erwin says, is that in practice SINTA has evolved from a monitoring and evaluation tool into a ‘gatekeeper’ that heavily influences many regulations, processes, and evaluation systems for lecturers and academic institutions in Indonesia. A lecturer can claim credit for a new work only if it appears on the SINTA platform.

Achievements in SINTA are tied to several regulations and platforms: the Promotion Assessment (PAK), which affects promotion; the Lecturer Workload report, which reflects lecturers’ performance each semester and determines the amount of their incentives; and university clustering, which affects an institution’s autonomy to conduct research.

As a result, according to Erwin, many lecturers end up sacrificing research quality and looking for publishing shortcuts, even falling prey to “predatory” publishers, because their performance on publication metrics greatly affects their careers, fates, and welfare.

According to these academics, Indonesia’s heavy focus on publication metrics has fostered not only a questionable conference-organizing industry that churns out proceedings with weak peer review, but also self-citation to inflate citation counts and the use of publication brokers to meet the growing demand for publication in reputable international journals.

“Well, all of this is for promotion purposes, even for graduation, and also for annual performance assessment. This has become an important component of lecturers’ workloads,” said Erwin.

“Why does the assessment system trap or encourage researchers to ‘trick’ the system? So, if we look at it, it’s not just about SINTA, but more about the regulations as a whole. That should be the main focus of criticism,” concluded Ilham.